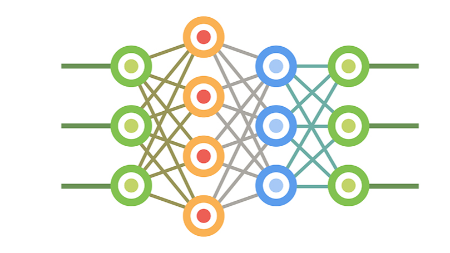

Deep neural networks (machine learning) in healthcare

Machine learning describes a family of algorithms that identify complex patterns in data without explicit human instruction. Classical methods work well with modest datasets, while deep neural networks excel with larger volumes and can process images, text, or clinical data. Applications in medicine now include image-based diagnosis, automated documentation, and decision support, with some studies reporting performance that matches or exceeds clinician accuracy. Neural networks are trained by iterative error correction through back-propagation. Challenges persist, including fragmented and unstructured data, concerns about interpretability, and the need for explanation techniques, such as LIME and saliency maps, that make model outputs transparent. As datasets grow and computational tools become more accessible, prospective trials are beginning to test whether real-time AI assistance can improve clinical outcomes.
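To make "iterative error correction through back-propagation" concrete, the following is a minimal sketch in plain Python, not a method taken from the article. It assumes a tiny network with one hidden layer, sigmoid activations, a squared-error loss, and a toy XOR dataset standing in for real inputs. The names `train`, `forward`, `XOR_DATA`, and all hyperparameters are illustrative choices, not clinical data or a recommended configuration.

```python
import math
import random

# Toy dataset (XOR), used only for illustration: (inputs, target) pairs.
XOR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass: inputs -> hidden activations -> single output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def mean_squared_error(data, params):
    return sum((forward(x, *params)[1] - y) ** 2 for x, y in data) / len(data)


def train(data, n_hidden=4, epochs=5000, lr=0.5, seed=0):
    """Train with per-example gradient descent; return (initial, final, params)."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)]
                for _ in range(n_hidden)]
    b_hidden = [0.0] * n_hidden
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_out = 0.0
    initial_loss = mean_squared_error(
        data, (w_hidden, b_hidden, w_out, b_out))

    for _ in range(epochs):
        for x, y in data:
            hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Error signal at the output for the loss 0.5 * (output - y)^2,
            # multiplied by the sigmoid derivative output * (1 - output).
            delta_out = (output - y) * output * (1.0 - output)
            # Propagate the error backwards through the output weights
            # (computed before those weights are updated).
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            # Correct each weight in proportion to its share of the error.
            for j in range(n_hidden):
                w_out[j] -= lr * delta_out * hidden[j]
                b_hidden[j] -= lr * delta_hidden[j]
                for i in range(n_in):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
            b_out -= lr * delta_out

    params = (w_hidden, b_hidden, w_out, b_out)
    return initial_loss, mean_squared_error(data, params), params


if __name__ == "__main__":
    before, after, _ = train(XOR_DATA)
    print(f"mean squared error before training: {before:.4f}")
    print(f"mean squared error after training:  {after:.4f}")
```

Each pass compares the network's output with the known answer, sends that error backwards through the layers, and nudges every weight slightly in the direction that reduces it. Production systems rely on the same principle but use dedicated libraries, far larger networks, and far more data.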
